9.3. Fitting a function to data with nonlinear least squares

This is one of the 100+ free recipes of the IPython Cookbook, Second Edition, by Cyrille Rossant, a guide to numerical computing and data science in the Jupyter Notebook. The ebook and printed book are available for purchase at Packt Publishing.

This is one of the 100+ free recipes of the IPython Cookbook, Second Edition, by Cyrille Rossant, a guide to numerical computing and data science in the Jupyter Notebook. The ebook and printed book are available for purchase at Packt Publishing.

▶ Text on GitHub with a CC-BY-NC-ND license

▶ Code on GitHub with a MIT license

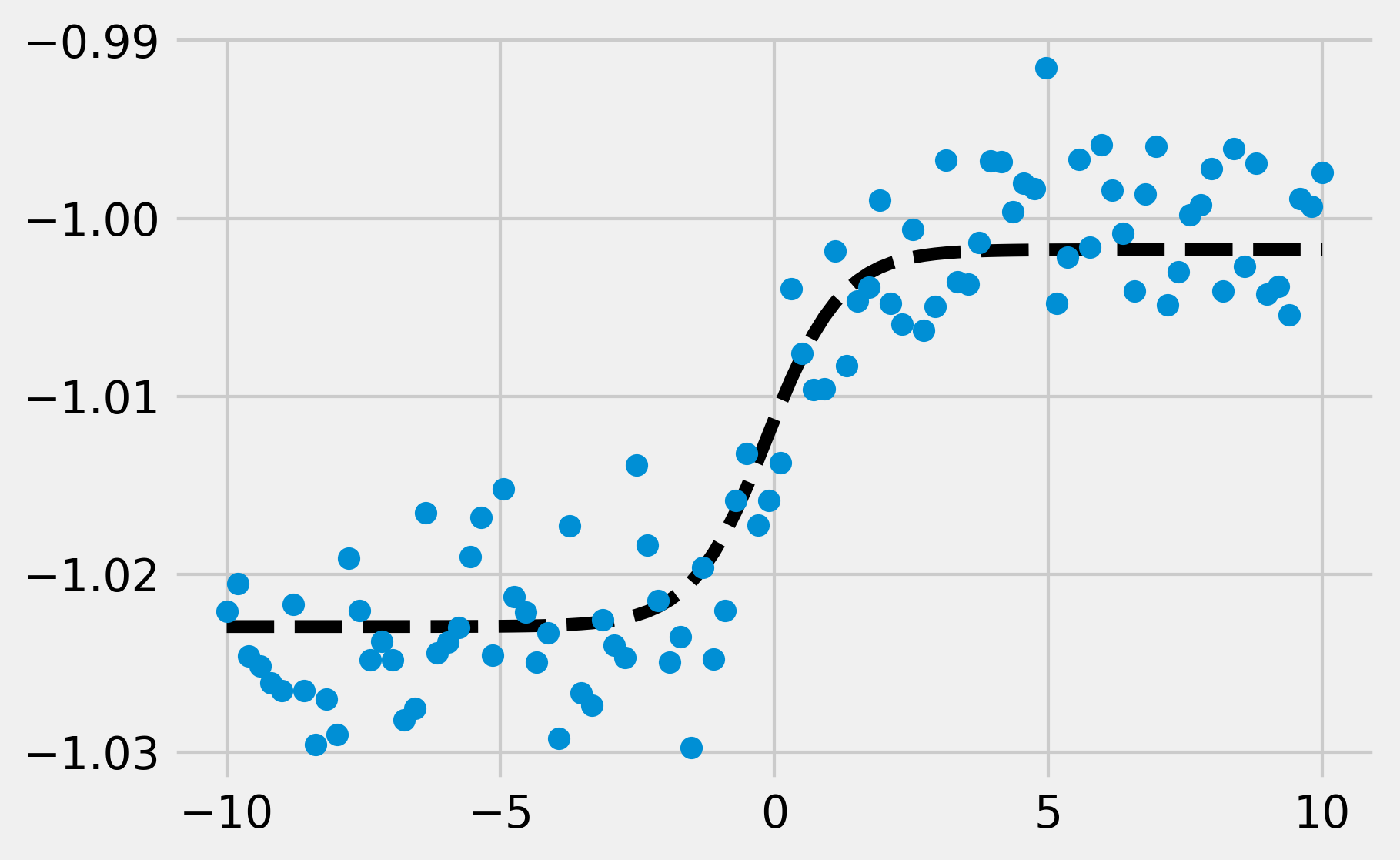

▶ Go to Chapter 9 : Numerical Optimization

▶ Get the Jupyter notebook

In this recipe, we will show an application of numerical optimization to nonlinear least squares curve fitting. The goal is to fit a function, depending on several parameters, to data points. In contrast to the linear least squares method, this function does not have to be linear in those parameters.

We will illustrate this method on artificial data.

How to do it...

1. Let's import the usual libraries:

import numpy as np

import scipy.optimize as opt

import matplotlib.pyplot as plt

%matplotlib inline

2. We define a logistic function with four parameters:

def f(x, a, b, c, d):

return a / (1. + np.exp(-c * (x - d))) + b

3. Let's define four random parameters:

a, c = np.random.exponential(size=2)

b, d = np.random.randn(2)

4. Now, we generate random data points by using the sigmoid function and adding a bit of noise:

n = 100

x = np.linspace(-10., 10., n)

y_model = f(x, a, b, c, d)

y = y_model + a * .2 * np.random.randn(n)

5. Here is a plot of the data points, with the particular sigmoid used for their generation (in dashed black):

fig, ax = plt.subplots(1, 1, figsize=(6, 4))

ax.plot(x, y_model, '--k')

ax.plot(x, y, 'o')

6. We now assume that we only have access to the data points and not the underlying generative function. These points could have been obtained during an experiment. By looking at the data, the points appear to approximately follow a sigmoid, so we may want to try to fit such a curve to the points. That's what curve fitting is about. SciPy's curve_fit() function allows us to fit a curve defined by an arbitrary Python function to the data:

(a_, b_, c_, d_), _ = opt.curve_fit(f, x, y)

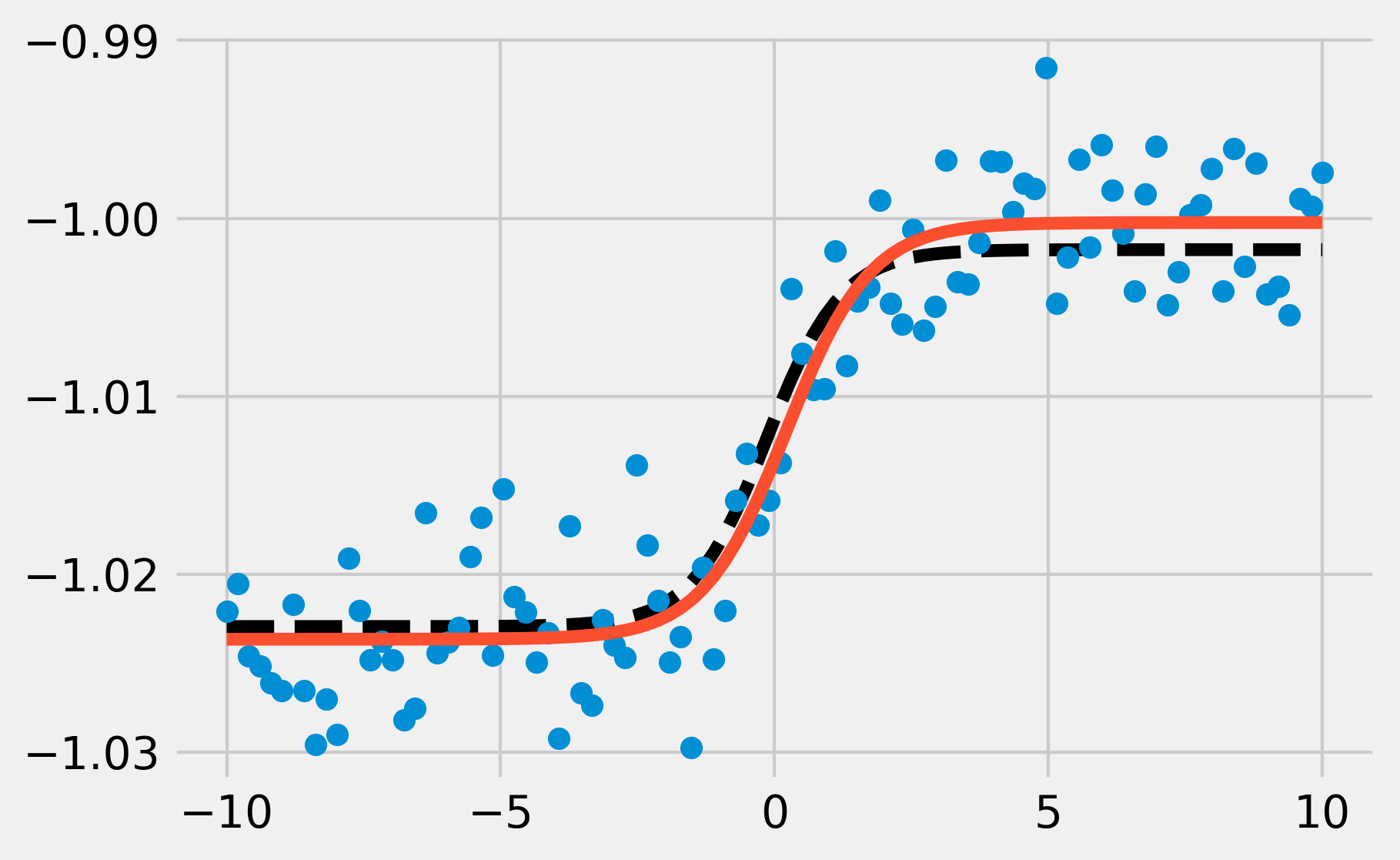

7. Now, let's take a look at the fitted sigmoid curve:

y_fit = f(x, a_, b_, c_, d_)

fig, ax = plt.subplots(1, 1, figsize=(6, 4))

ax.plot(x, y_model, '--k')

ax.plot(x, y, 'o')

ax.plot(x, y_fit, '-')

The fitted sigmoid appears to be reasonably close to the original sigmoid used for data generation.

How it works...

In SciPy, nonlinear least squares curve fitting works by minimizing the following cost function:

Here, \(\beta\) is the vector of parameters (in our example, \(\beta =(a,b,c,d)\)).

Nonlinear least squares is really similar to linear least squares for linear regression. Whereas the function \(f\) is linear in the parameters with the linear least squares method, it is not linear here. Therefore, the minimization of \(S(\beta)\) cannot be done analytically by solving the derivative of \(S\) with respect to \(\beta\). SciPy implements an iterative method called the Levenberg-Marquardt algorithm (an extension of the Gauss-Newton algorithm).

There's more...

Here are further references:

- Reference documentation of curvefit available at http://docs.scipy.org/doc/scipy/reference/generated/scipy.optimize.curve_fit.html

- Nonlinear least squares on Wikipedia, available at https://en.wikipedia.org/wiki/Non-linear_least_squares

- Levenberg-Marquardt algorithm on Wikipedia, available at https://en.wikipedia.org/wiki/Levenberg%E2%80%93Marquardt_algorithm

See also

- Minimizing a mathematical function